Synopsis

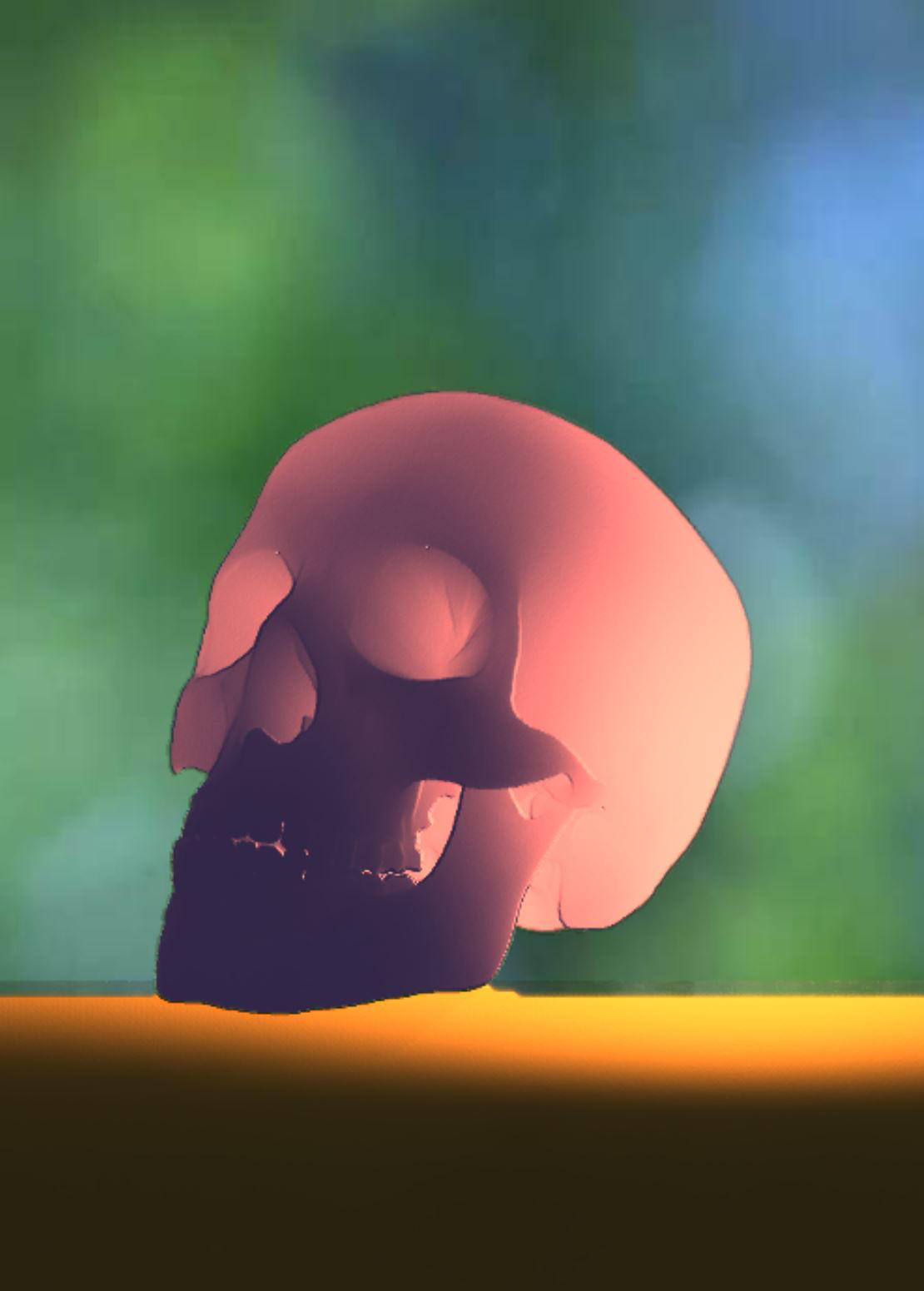

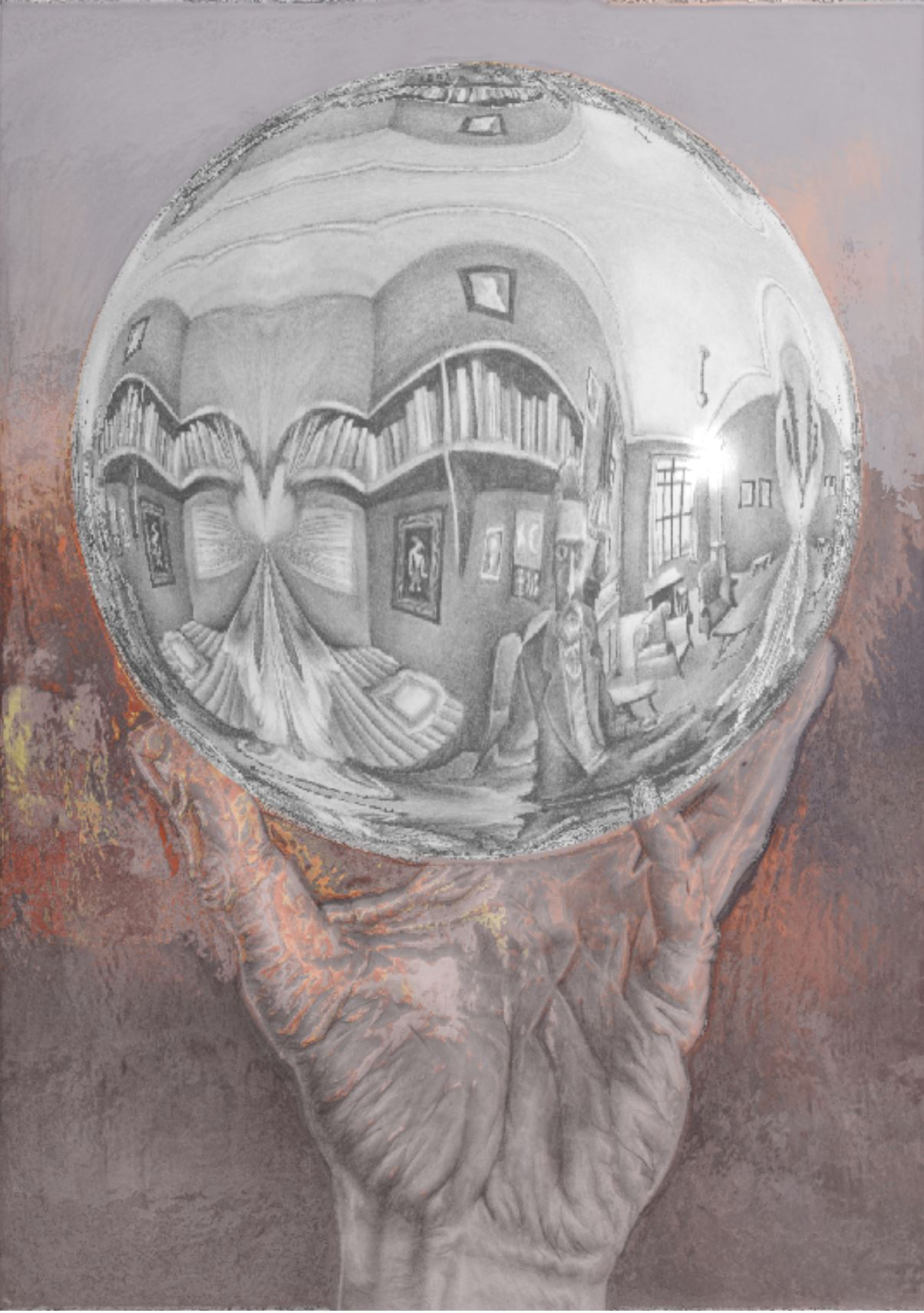

We have developed a system that lets anybody turn any existing painting into a stylized dynamic artwork with art-directed controls for various global illumination effects such as reflection, refraction, Fresnel, shadows, and subsurface scattering. Our system takes information provided primarily through a normal map or a depth map, together with a minimal set of hand-painted textures, and renders physically plausible illumination with complete style control.

Introduction

We develop a system to create dynamic paintings that can be re-rendered interactively in real time on the web.

Using this system, any existing painting can be turned into an interactive, web-based dynamic artwork.

Our interactive system provides most global illumination effects, such as reflection, refraction, shadows, and subsurface scattering, by processing images.

In our system, the scene is defined only by a set of images. In the current implementation, these include (1) a shape image, (2) two diffuse images, (3) one background image, (4) one foreground image, and (5) one transparency image.

The shape image is either a normal map or a depth map. The two diffuse images are usually hand-painted; they are interpolated using illumination information. The transparency image defines the transparent and reflective regions, which can reflect the foreground image and refract the background image, both of which are also hand-drawn.
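As a rough illustration of the image set listed above, the scene description can be thought of as a small record of images. This is only a sketch: the class name `MockSceneImages`, the field names, the list-of-rows image representation, and the requirement that all images share one resolution are assumptions made for the example, not details of the actual implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: an image is a list of rows of RGB pixels with channels in [0, 1].
Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]


@dataclass
class MockSceneImages:
    """Hypothetical container for the images that define one scene."""
    shape: Image          # (1) normal map or depth map
    shape_is_depth: bool  # True for a depth map, False for a normal map
    diffuse_dark: Image   # (2) diffuse image used where little light arrives
    diffuse_lit: Image    # (2) diffuse image used where full light arrives
    background: Image     # (3) refracted through transparent regions
    foreground: Image     # (4) reflected by reflective regions
    transparency: Image   # (5) marks transparent and reflective regions

    def all_images(self) -> List[Image]:
        """Return the six images in the order listed in the text."""
        return [self.shape, self.diffuse_dark, self.diffuse_lit,
                self.background, self.foreground, self.transparency]

    def same_size(self) -> bool:
        """Return True when every image has the same height and width."""
        sizes = {(len(img), len(img[0]) if img else 0)
                 for img in self.all_images()}
        return len(sizes) == 1
```

A consistency check such as `same_size()` is useful because every later step combines the images pixel by pixel.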

This framework, which relies mainly on hand-drawn images, provides qualitatively convincing painterly global illumination effects such as reflection and refraction.

This system is the result of a long line of research contributions on Barycentric Shading using Mock3D shapes such as normal maps and height fields (see the Siggraph blog for a short description).

Mock3D shapes are proxy shapes with embedded perspective information. They can also be called anamorphic bas-reliefs. They are capable of representing impossible shapes.

We also include parameters to provide additional artistic controls. For instance, our piecewise linear Fresnel function makes it possible to control the ratio of reflection and refraction, providing physically plausible compositing of reflection and refraction with artistic control. The art-directed warping equations provide qualitatively convincing refraction and reflection effects with linearized artistic control.
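To make the idea of a piecewise linear Fresnel control concrete, the sketch below uses a single linear ramp between two breakpoints. The exact shape of the function used in the system is not given in this text, so the function name, the two breakpoint parameters `start` and `end`, and their default values are all assumptions chosen for illustration.

```python
def piecewise_linear_fresnel(cos_theta: float,
                             start: float = 0.2,
                             end: float = 0.8) -> float:
    """Return a reflection weight in [0, 1] from a linear ramp.

    cos_theta: cosine of the angle between the surface normal and the
               view direction (1 = facing the viewer, 0 = grazing).
    start, end: hypothetical artist controls. The weight is 1 for
                cos_theta <= start, 0 for cos_theta >= end, and
                linear in between.
    """
    if end <= start:
        raise ValueError("end must be greater than start")
    ramp = (end - cos_theta) / (end - start)
    return min(1.0, max(0.0, ramp))
```

With this convention the returned value weights the reflection and one minus that value weights the refraction, so moving `start` and `end` shifts how quickly a surface turns from mostly refractive to mostly reflective toward grazing angles.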

Mock-3D Rendering Pipeline

The main problem with existing non-photorealistic or photorealistic rendering methods is that they are still based on the standard computer graphics pipeline. There is still a need for modeling and animation software such as Maya or Blender, or our own standalone modeling software, to represent scenes as 3D proxy geometry. Moreover, there is also a need for rendering software such as RenderMan, Arnold, or our own. The problem is even harder for expressive depiction. One major problem with this approach is that the rendering process in such a graphics pipeline takes a significant amount of time, since it generally involves a great deal of integration across a variety of software platforms.

There is definitely a need to reduce the computation time required to obtain global illumination with style control. In addition, the classical graphics pipeline requires a significant amount of 3D expertise. There is a need for an alternative approach for people who do not want to deal with these complex tools and 3D shapes, but still want to obtain global illumination with complete control over visual styles.

In this work, we present a completely different approach to the rendering pipeline. All information, from shapes to materials, is provided by images. Shapes are defined either by normal maps or depth maps, which we call Mock3D shapes. Shading parameters are also provided by a set of control images. Using these images, we can obtain physically plausible local and global illumination with complete style control.

There already exist solutions to obtain diffuse reflection, shadows, reflection, and refraction with a set of images. The use of normal maps to obtain painterly results with global illumination started with the Ph.D. theses of Youyou Wang [1] and Ozgur Gonen [2]. We introduced vector field or gradient domain rendering during that period [3] to obtain global illumination with normal maps (see also this report). Later, with Fermi Perumal's M.S. thesis, we introduced a method to obtain convincing global illumination with depth maps by extending the original ideas used in gradient domain rendering [4].

Barycentric Shaders

The heart of our rendering pipeline is Barycentric shading, which allows complete control of visual results in any photorealistic or non-photorealistic style [5]. Barycentric shading can also be used in the classical computer graphics pipeline with real 3D proxy models. To demonstrate that a wide range of artistic works can be created using Barycentric shading, several M.S. theses and capstone projects in Visualization at Texas A&M University have been produced [6,7,8,9,10,11,12].

Barycentric shading is closely related to Barycentric methods in Computer Aided Geometric Design (CAGD). The main difference is that our control points are not "spatial positions" as in CAGD applications. Instead, in Barycentric shading the control points are images, which we call "control textures". We create renderings based on Barycentric interpolations of control textures.

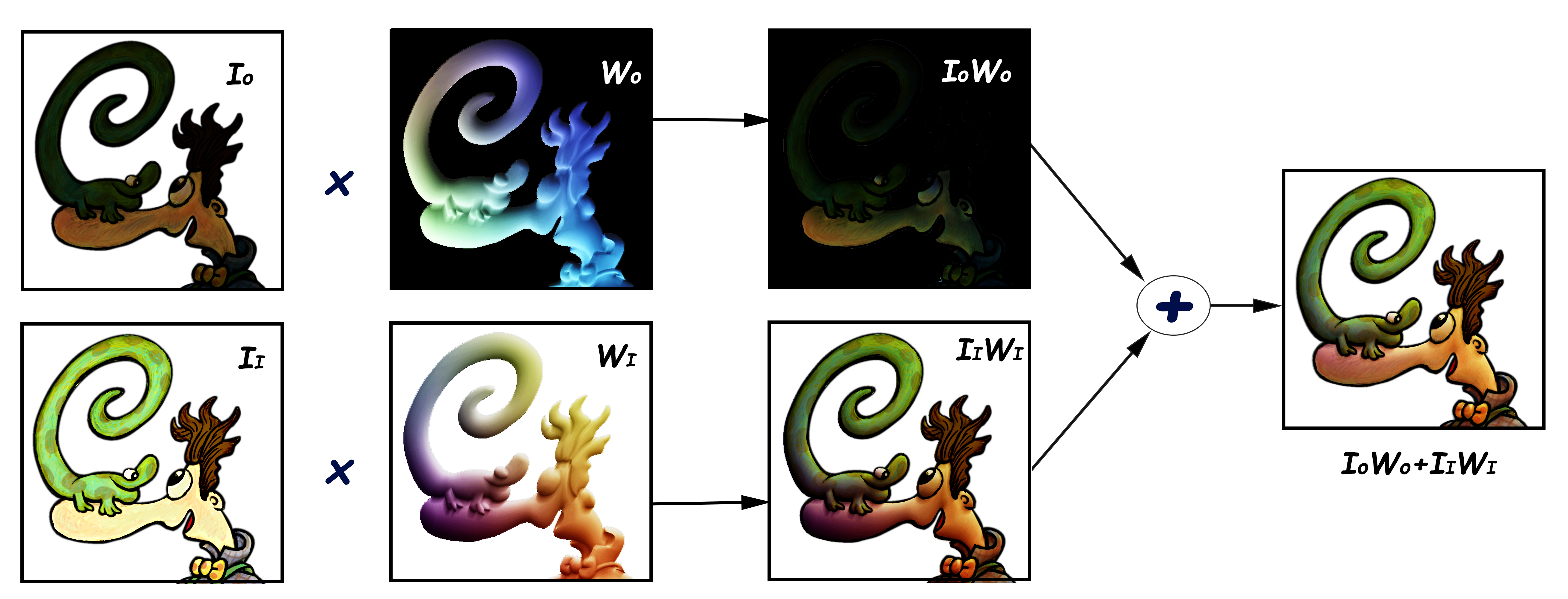

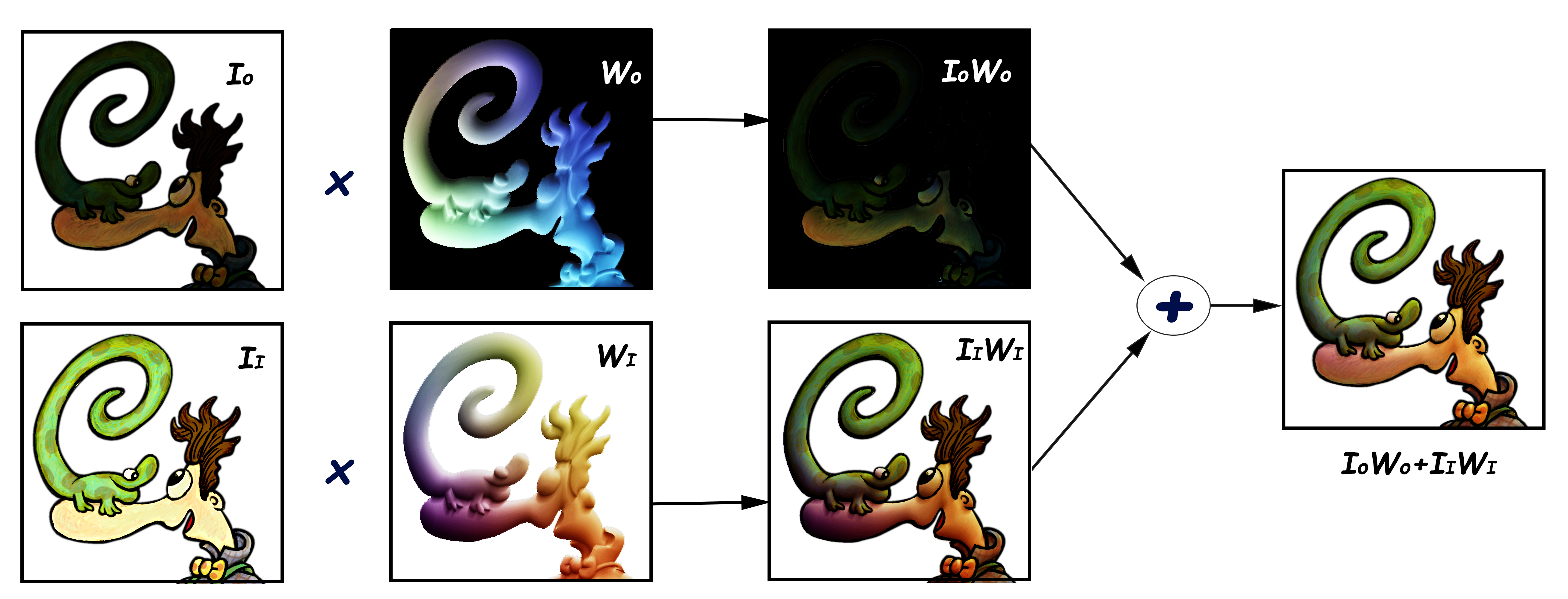

An important property of Barycentric shading is that it provides a wide variety of styles using different Barycentric controls. The diffuse shader of the current web implementation is based on the simplest (affine) Bezier form with basis functions t and (1-t), where t is the percentage of light that can reach a given pixel region. This affine Bezier formulation requires only two control textures, and rendering simply becomes an interpolation of two texture images I0 and I1 as I0 (1-t) + I1 t, as shown in the image at the right.

Compared to the diffuse part of standard SVBRDF models, we represent the diffuse term with two control images instead of one. In standard SVBRDF models, the control texture I0 that corresponds to the (1-t) term is missing, which is equivalent to using a black image as I0 in our case. An important implication of having the control texture I0 for the (1-t) term is that we can include higher-order effects embedded into the image, which corresponds to an ambient term. This additional term allows us to obtain an artistic look. The same model can also be used to obtain photorealistic results by considering I0 as the ambient illumination that exists in the scene.
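The affine interpolation I0 (1-t) + I1 t can be sketched per pixel as follows. This is a minimal illustration, not the web implementation itself: the function names and the list-of-rows image representation are assumptions, and t is taken per channel so that it can carry colored light.

```python
from typing import List, Tuple

# Assumption: images are lists of rows of RGB pixels with channels in [0, 1].
Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]


def shade_pixel(i0: Pixel, i1: Pixel, t: Pixel) -> Pixel:
    """Affine Bezier blend I0 (1 - t) + I1 t, applied per color channel."""
    return tuple(a * (1.0 - w) + b * w for a, b, w in zip(i0, i1, t))


def shade_diffuse(i0: Image, i1: Image, t_map: Image) -> Image:
    """Blend two control textures using a per-pixel, per-channel t map."""
    return [
        [shade_pixel(p0, p1, t) for p0, p1, t in zip(row0, row1, row_t)]
        for row0, row1, row_t in zip(i0, i1, t_map)
    ]
```

Passing an all-black I0 reduces the result to I1 t, which matches the observation that a single diffuse texture is the special case of a black I0.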

Other types of diffuse shaders are necessary to obtain different styles. For instance, charcoal, crosshatching, and Chinese painting styles require zero-degree B-splines to obtain sharp changes [6,7,8]. In charcoal and crosshatching, the number of control textures can be high. Similarly, we found that obtaining a Georgia O'Keeffe style requires at least a cubic Bezier curve, which requires four control textures [13].
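The two families of basis functions mentioned here can be sketched as weight generators over the control textures. The cubic Bernstein weights are standard; the zero-degree B-spline is shown with uniform knots on [0, 1], which is an assumption, as are the function names.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]


def cubic_bernstein_weights(t: float) -> List[float]:
    """Weights of a cubic Bezier curve for its four control textures."""
    u = 1.0 - t
    return [u ** 3, 3 * t * u ** 2, 3 * t ** 2 * u, t ** 3]


def step_weights(t: float, n: int) -> List[float]:
    """Zero-degree B-spline weights: exactly one of n textures is selected.

    Assumes uniform knots, so [0, 1] is cut into n equal intervals.
    """
    t = min(1.0, max(0.0, t))
    k = min(int(t * n), n - 1)
    return [1.0 if i == k else 0.0 for i in range(n)]


def blend(controls: Sequence[Pixel], weights: Sequence[float]) -> Pixel:
    """Barycentric combination of control-texture pixels."""
    return tuple(
        sum(w * p[c] for w, p in zip(weights, controls)) for c in range(3)
    )
```

Because the step weights jump from one texture to the next, the shaded result changes abruptly as t crosses a knot, which gives the sharp transitions needed for hatching-like styles, while the cubic weights vary smoothly across all four textures.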

To control both light position and time of day, we used a bilinear Bezier form with basis functions st, (1-s)t, s(1-t), and (1-s)(1-t), where s is the normalized time of day and t = 0.5 cos Θ + 0.5. We used this approach to obtain dynamic versions of Edgar Payne and Anne Garney paintings [10,11].
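A per-pixel sketch of the bilinear form is given below. The order in which the four control textures are paired with the basis functions, and the function names, are assumptions made for the example.

```python
from typing import Sequence, Tuple

Pixel = Tuple[float, float, float]


def bilinear_weights(s: float, t: float) -> Tuple[float, float, float, float]:
    """Basis functions in the order st, (1-s)t, s(1-t), (1-s)(1-t)."""
    return (s * t, (1 - s) * t, s * (1 - t), (1 - s) * (1 - t))


def shade_bilinear(controls: Sequence[Pixel], s: float,
                   cos_theta: float) -> Pixel:
    """Blend four control-texture pixels by time of day and lighting.

    controls: pixels of the four control textures, assumed to be ordered
              to match bilinear_weights (that is, for (s, t) equal to
              (1, 1), (0, 1), (1, 0), and (0, 0)).
    s: normalized time of day in [0, 1].
    cos_theta: cosine term of the lighting; t = 0.5 cos_theta + 0.5.
    """
    t = 0.5 * cos_theta + 0.5
    weights = bilinear_weights(s, t)
    return tuple(
        sum(w * p[c] for w, p in zip(weights, controls)) for c in range(3)
    )
```

The four weights are non-negative and sum to one for s and t in [0, 1], so the result always stays within the range spanned by the four control textures.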

Adding global illumination terms requires a higher-dimensional Barycentric formula. In this case, we interpolate the warped background and warped environment images using Fresnel, which is actually another affine Bezier term. Then we combine the result with the diffuse term based on material properties.
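The two-stage combination can be sketched per pixel as two nested affine blends. How the material properties enter the final combination is not spelled out in this text, so the single scalar `material` weight used here is an assumption, as are the function and parameter names.

```python
from typing import Tuple

Pixel = Tuple[float, float, float]


def lerp(a: Pixel, b: Pixel, w: float) -> Pixel:
    """Affine Bezier blend a (1 - w) + b w, applied per channel."""
    return tuple(x * (1.0 - w) + y * w for x, y in zip(a, b))


def composite_pixel(diffuse: Pixel, refracted: Pixel, reflected: Pixel,
                    fresnel: float, material: float) -> Pixel:
    """Combine diffuse shading with reflection and refraction.

    refracted: pixel sampled from the warped background image.
    reflected: pixel sampled from the warped environment image.
    fresnel:   weight in [0, 1]; 0 gives pure refraction, 1 pure reflection.
    material:  hypothetical weight in [0, 1]; 0 keeps only the diffuse
               term, 1 keeps only the reflection and refraction term.
    """
    global_term = lerp(refracted, reflected, fresnel)
    return lerp(diffuse, global_term, material)
```

With `material` set to 0 the pixel is purely diffuse, so opaque regions are unaffected by the warped images.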

Computation of t value

For normal maps, we use t = 0.5 cos Θ + 0.5. Note that this is the term from Gooch & Gooch shading. It is also possible to use the classical t = max(cos Θ, 0), but it gives flat regions in areas where cos Θ < 0. Moreover, t has three channels, as shown in the image, which helps when using colored lights.
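The two choices of t can be compared with a short sketch. It assumes that Θ is the angle between the surface normal and the direction toward the light, and it computes a single scalar t; how the three channels are formed is not described here, so the sketch does not attempt it. The function names are illustrative.

```python
import math
from typing import Sequence


def cos_theta(normal: Sequence[float], light_dir: Sequence[float]) -> float:
    """Cosine of the angle between a normal and a light direction."""
    n_len = math.sqrt(sum(x * x for x in normal))
    l_len = math.sqrt(sum(x * x for x in light_dir))
    if n_len == 0.0 or l_len == 0.0:
        raise ValueError("vectors must be non-zero")
    dot = sum(a * b for a, b in zip(normal, light_dir))
    return dot / (n_len * l_len)


def t_gooch(c: float) -> float:
    """t = 0.5 cos(theta) + 0.5, which maps [-1, 1] onto [0, 1]."""
    return 0.5 * c + 0.5


def t_clamped(c: float) -> float:
    """Classical t = max(cos(theta), 0), flat wherever cos(theta) < 0."""
    return max(c, 0.0)
```

For cos Θ = -0.5 and cos Θ = -1, `t_clamped` returns 0 in both cases, while `t_gooch` returns 0.25 and 0, so the remapped term still varies across surfaces that face away from the light.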

For depth maps, t is computed using our own integrated d/R illumination model, which can produce direct illumination, subsurface scattering, and shadows by processing the depth image [4].

Mock3D Geographic Visualization System

The Mock3D rendering pipeline is not just for artistic applications. It can be useful for a wide variety of scientific applications by providing an easy way to obtain high-quality interactive visualizations of complex data. One such example is geographic visualization. In this direction, our goal is to obtain aesthetically rich renderings of maps that are similar to the Vintage Relief Maps by Sean Conway.

We observed that there is already rich data in GIS (Geographic Information Systems) that can be used directly in our Mock3D pipeline to render such relief maps. For instance, elevation data can be used as a depth map, and landcover data can be used to obtain diffuse materials. Additional operations on images can help produce maps that are easy to understand, similar to the Vintage Relief Maps.
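As a minimal sketch of how such GIS layers might be prepared, the code below rescales an elevation grid to a [0, 1] depth map and turns landcover class codes into diffuse colors. The function names, the convention that higher ground maps to larger depth values, and the example palette are all assumptions for illustration.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[float, float, float]


def elevation_to_depth(elevation: List[List[float]]) -> List[List[float]]:
    """Rescale an elevation grid linearly so its values span [0, 1].

    Assumption: higher elevation maps to a larger depth-map value.
    A completely flat grid maps to all zeros.
    """
    lo = min(min(row) for row in elevation)
    hi = max(max(row) for row in elevation)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in elevation]
    return [[(e - lo) / span for e in row] for row in elevation]


def landcover_to_diffuse(classes: List[List[int]],
                         palette: Dict[int, Pixel]) -> List[List[Pixel]]:
    """Look up a diffuse color for every landcover class code."""
    return [[palette[c] for c in row] for row in classes]
```

For example, a hypothetical palette such as `{0: (0.2, 0.4, 0.8), 1: (0.1, 0.5, 0.2)}` would paint class 0 in blue and class 1 in green; the resulting image can then serve as one of the diffuse control textures.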
